Believe it or not, there’s some great engineering and sportsmanship behind this:

We’re trying to create low-latency, high-fidelity, large-scale multi-user experiences with high-frequency sensors and displays. It’s at the edge of what’s possible. The high-end Head Mounted Displays that are coming out are pretty good, but even they have been dicey so far. The hand controllers have truly sucked, and we’ve been basing everything on the faith that they will get better. But even our optimism had waned on the optical recognition of the LeapMotion hand sensor. We made it work as well as we could, and then left it to bit-rot.

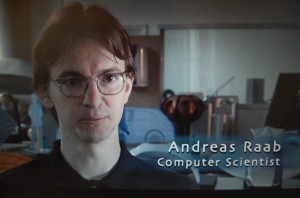

But yesterday LeapMotion came out with a new version of their API library, compatible with the existing hardware. Brad is the engineer shown above, and no one knows better than he does how hard it is to make this stuff work. He was so sure that the new software would not “just work” that he offered a bottle of Macallan 18-year-old scotch to anyone who could get it working. Like the hardworking bee that doesn’t know it can’t possibly fly, our community leader, Chris, is not an engineer and just hooked it up.

In just minutes he made this video to show Brad, who works from a different office.

True to his word, Brad immediately went online and ordered the scotch, to be sent to Chris. Brad then dug out his old Leap hardware from the drawer next to his CueCat and made the more articulate version above.

We sent it to a few folks, including Stanford’s VR lab, which promptly tweeted it with the caption, “One small step for Mankind? Today @highfidelityinc we saw 1st networked avatar with fingers”.

So now we have avatars with full-body IK driven by 18-degree-of-freedom sensors, plus optical tracking of each finger, facial features, and eye gaze, all networked in real time to scores of simultaneous users, with physics. In truth, we still have a lot of work to do before anyone can just plug this stuff in and have it work, but it’s pretty clear now that this is going to happen!

One more dig: Apple has long said that they can only make things work at the leading edge by making the hardware and software together, not interoperable with anyone else’s. Oculus has said that networked physics is too hard, and open platforms are too hard. Apple and Oculus make really great stuff that we love. We make only open source software, and we work with all the hardware, on Windows, Mac, and Linux.