This week, some of our early adopters got together for a party in virtual reality. One of the most striking things about it is how High Fidelity mixes artist-created animations with sensor-driven head and hand motion, automatically and without the user having to switch between modes. Notice the fellow in the skipper’s cap walking. His body, and particularly his legs, are driven by an artist-created animation, which is automatically selected and paced to match either his real body’s physical translation or game-controller-style inputs. Meanwhile, his head and arms are driven by the relative position and rotation of his HMD and the hand controllers he is holding(*).

So, dancing is allowed.

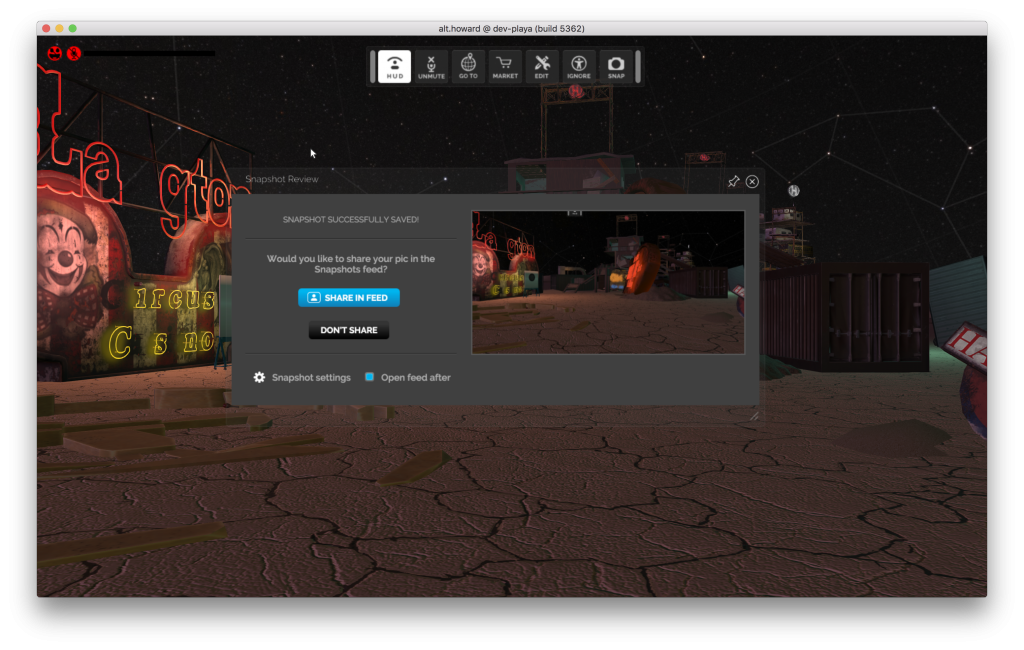

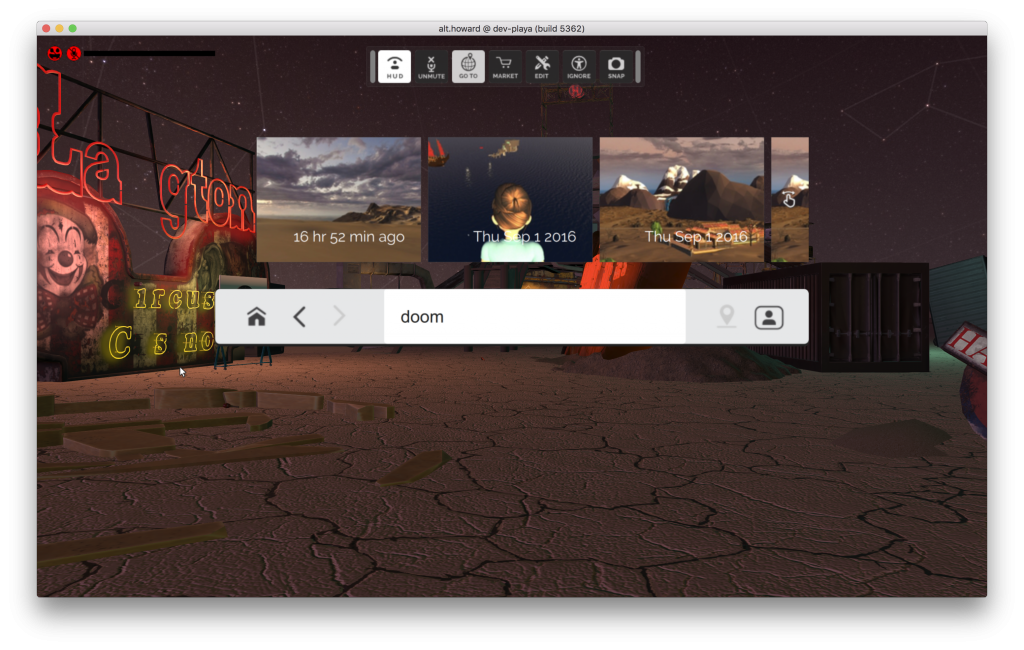
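For readers curious about the mechanics, here is a rough sketch of the idea described at the top of this post: pick a locomotion clip and a playback rate from the avatar's measured speed, then let tracked head and hand poses override the animated ones. This is a minimal illustration under stated assumptions, not High Fidelity's actual implementation. The clip names, authored speeds, rate limits, and joint names are all hypothetical, and a real system would use inverse kinematics to bend the arms toward the tracked hands rather than simply copying poses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Clip:
    name: str
    authored_speed: float  # m/s the artist's cycle covers at 1x playback


# Hypothetical clip set; the speeds are illustrative values only.
CLIPS = [
    Clip("idle", 0.0),
    Clip("walk", 1.4),
    Clip("run", 3.5),
]

IDLE_THRESHOLD = 0.1          # m/s below which the avatar counts as standing still
MIN_RATE, MAX_RATE = 0.5, 2.0  # clamp so clips never look absurdly slow or fast

# Hypothetical joint names driven by the HMD and hand controllers.
TRACKED_JOINTS = {"Head", "LeftHand", "RightHand"}


def select_locomotion(speed: float) -> tuple[str, float]:
    """Pick the clip authored closest to `speed` and a rate so the feet keep pace."""
    if speed < IDLE_THRESHOLD:
        return "idle", 1.0
    moving = [c for c in CLIPS if c.authored_speed > 0]
    clip = min(moving, key=lambda c: abs(c.authored_speed - speed))
    rate = speed / clip.authored_speed
    return clip.name, max(MIN_RATE, min(rate, MAX_RATE))


def compose_pose(animated: dict, tracked: dict) -> dict:
    """Start from the animated body pose, then let tracked joints win."""
    pose = dict(animated)
    for joint, value in tracked.items():
        if joint in TRACKED_JOINTS:
            pose[joint] = value
    return pose


if __name__ == "__main__":
    for speed in (0.0, 0.7, 2.0, 5.0):
        print(speed, select_locomotion(speed))
```

Choosing the nearest authored clip and then scaling its playback rate keeps foot motion roughly matched to ground speed, which is what stops an animated avatar from appearing to slide across the floor.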

But the system is also open to realtime customization by the participants. Some of the folks spontaneously created drinks, hats, and other items during the party and brought them in-world for everyone to use. A speaker in the virtual room was held by a tiny fairy that lip-synced to the music. One person brought a script that altered gravity, allowing people to dance on the ceiling.

*: Alas, users who are physically seated at a desk tend to hold their hand controllers out in front of them, and their avatars' arms follow suit. You can see this with the purple-haired avatar.